CSE559A Lecture 9

Continue on ML for computer vision

Backpropagation

Computation graphs

SGD update for each parameter

is the error function.

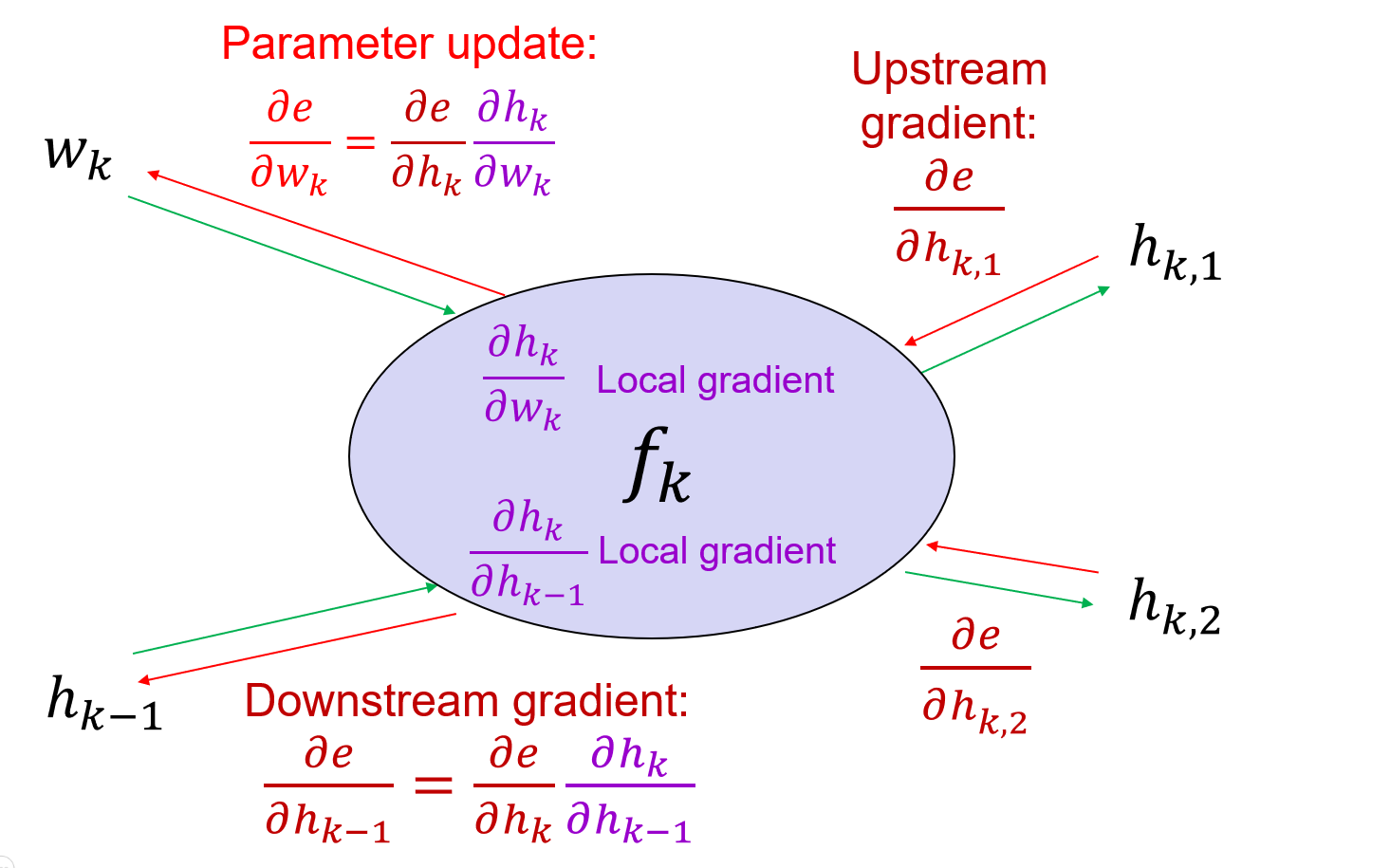

Using the chain rule

Suppose ,

Example:

So ,

For the general cases,

Where the upstream gradient is known, and the local gradient is known.

General backpropagation algorithm

The adding layer is the gradient distributor layer. The multiplying layer is the gradient switcher layer. The max operation is the gradient router layer.

Simple example: Element-wise operation (ReLU)

Where if and , otherwise .

When then (dead ReLU)

Other examples on ppt.

Convolutional Neural Networks

Basic Convolutional layer

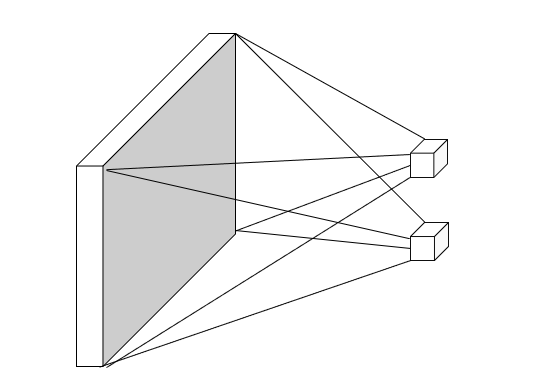

Flatten layer

Fully connected layer, operate on vectorized image.

With the multi-layer perceptron, the neural network trying to fit the templates.

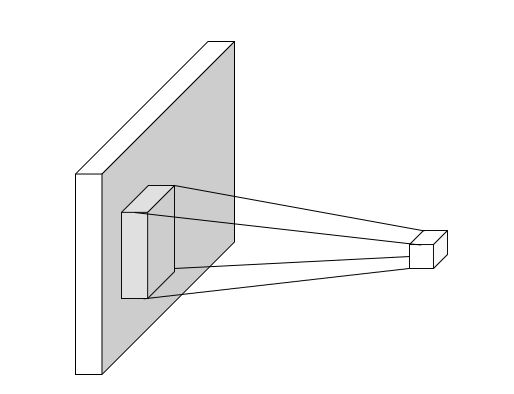

Convolutional layer

Limit the receptive fields of units, tiles them over the input image, and share the weights.

Equivalent to sliding the learned filter over the image , computing dot products at each location.

Padding: Add a border of zeros around the image. (higher padding, larger output size)

Stride: The step size of the filter. (higher stride, smaller output size)