CSE559A Lecture 22

Continue on Robust Fitting of parametric models

RANSAC

Definition: RANdom SAmple Consensus

RANSAC is a method to fit a model to a set of data points.

It is a non-deterministic algorithm that can be used to fit a model to a set of data points.

Pros:

- Simple and general

- Applicable to many different problems

- Often works well in practice

Cons:

- Lots of parameters to set

- Number of iterations grows exponentially as outlier ratio increases

- Can’t always get a good initialization of the model based on the minimum number of samples.

Hough Transform

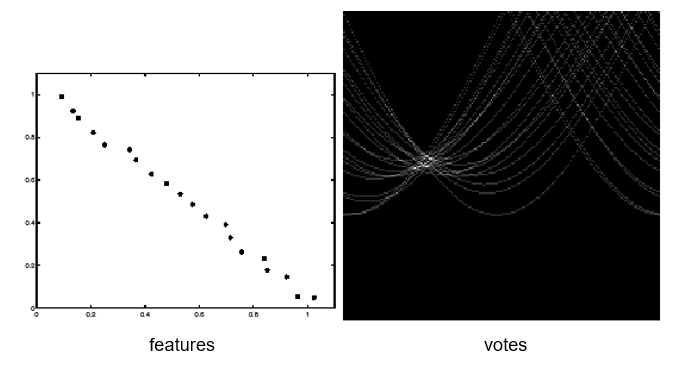

Use point-line duality to find lines.

In practice, we don’t use (m,b) parameterization.

Instead, we use polar parameterization:

Algorithm outline:

- Initialize accumulator to all zeros

- For each feature point

- For to

- For each feature point

- Find the value(s) of where is a local maximum (perform NMS on the accumulator array)

- The detected line in the image is given by

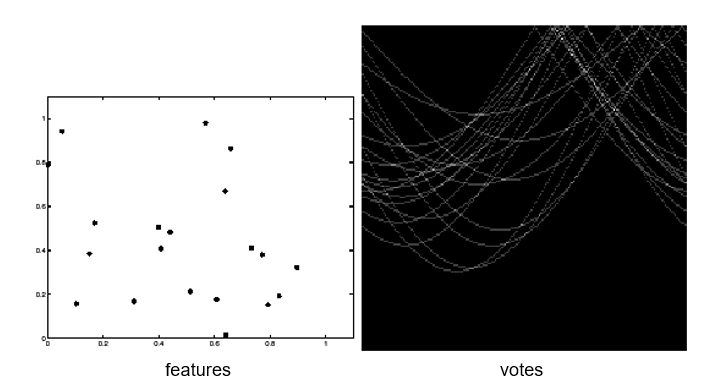

Effect of noise

Noise makes the peak fuzzy.

Effect of outliers

Outliers can break the peak.

Pros and Cons

Pros:

- Can deal with non-locality and occlusion

- Can detect multiple instances of a model

- Some robustness to noise: noise points unlikely to contribute consistently to any single bin

- Leads to a surprisingly general strategy for shape localization (more on this next)

Cons:

- Complexity increases exponentially with the number of model parameters

- In practice, not used beyond three or four dimensions

- Non-target shapes can produce spurious peaks in parameter space

- It’s hard to pick a good grid size

Generalize Hough Transform

Template representation: for each type of landmark point, store all possible displacement vectors towards the center

Detecting the template:

For each feature in a new image, look up that feature type in the model and vote for the possible center locations associated with that type in the model

Implicit shape models

Training:

- Build codebook of patches around extracted interest points using clustering

- Map the patch around each interest point to closest codebook entry

- For each codebook entry, store all positions it was found, relative to object center

Testing:

- Given test image, extract patches, match to codebook entry

- Cast votes for possible positions of object center

- Search for maxima in voting space

- Extract weighted segmentation mask based on stored masks for the codebook occurrences

Image alignment

Affine transformation

Simple fitting procedure: linear least squares Approximates viewpoint changes for roughly planar objects and roughly orthographic cameras Can be used to initialize fitting for more complex models

Fitting an affine transformation:

Only need 3 points to solve for 6 parameters.

Homography

Recall that

Use 2D homogeneous coordinates:

Reminder: all homogeneous coordinate vectors that are (non-zero) scalar multiples of each other represent the same point

Equation for homography in homogeneous coordinates:

Constraint from a match ,

How can we get rid of the scale ambiguity?

Cross product trick:

The cross product is defined as:

Let be the rows of . Then

Constraint from a match :

Rearranging the terms:

These equations aren’t independent! So, we only need two.

Robust alignment

Descriptor-based feature matching

Extract features Compute putative matches Loop:

- Hypothesize transformation

- Verify transformation (search for other matches consistent with )

RANSAC

Even after filtering out ambiguous matches, the set of putative matches still contains a very high percentage of outliers

RANSAC loop:

- Randomly select a seed group of matches

- Compute transformation from seed group

- Find inliers to this transformation

- If the number of inliers is sufficiently large, re-compute least-squares estimate of transformation on all of the inliers

At the end, keep the transformation with the largest number of inliers